The idea first

Download the page's HTML with the requests library -> use BeautifulSoup to filter out the useful information -> save it

The requests library

Sending a GET request

```python
import requests

response = requests.get('https://www.baidu.com')
```

Adding parameters (query string and headers)

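```python
import requests

# Query-string parameters: requests appends them to the URL as ?name=...&age=24
data = {
    'name': '琪露诺',
    'age': 24
}

# Request headers; a browser-like User-Agent makes the request look less like a script
header = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3",
    "Accept-Language": "en-US,en;q=0.9",
    "Accept-Encoding": "gzip, deflate",
    "Referer": "https://www.google.com",
    "Connection": "keep-alive"
}

response = requests.get('https://www.baidu.com', params=data, headers=header)
```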
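To see which URL and headers a call like the one above actually sends, without touching the network, you can build the same request with `requests.Request` and call `.prepare()`. This is a minimal sketch. The trimmed header dict is only for illustration.

```python
import requests

data = {'name': '琪露诺', 'age': 24}
header = {"User-Agent": "Mozilla/5.0", "Accept-Language": "en-US,en;q=0.9"}

# Build the GET request that requests.get() would send, but do not send it
prepared = requests.Request('GET', 'https://www.baidu.com',
                            params=data, headers=header).prepare()

# params are UTF-8 percent-encoded into the query string
print(prepared.url)
# headers live in a case-insensitive dict, so any capitalization works
print(prepared.headers['user-agent'])
```

Printing `prepared.url` is a quick way to debug a scraper that gets unexpected pages. It shows exactly which query string the server received.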

Reading the content

```python
# Set the encoding explicitly so that .text decodes the body as UTF-8
response.encoding = 'utf-8'
print(response.text)
```
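Why set `encoding` by hand? When a server sends a `text/*` Content-Type with no charset, requests falls back to ISO-8859-1 for `.text`, which garbles Chinese pages. (`response.apparent_encoding` offers a guess based on the content instead.) The sketch below reproduces the effect with plain bytes, using only the standard library and no request.

```python
# UTF-8 bytes as they would arrive in a response body
body = "琪露诺".encode("utf-8")

# Roughly what .text yields if the encoding is left as ISO-8859-1
garbled = body.decode("iso-8859-1")
# What .text yields after response.encoding = 'utf-8'
correct = body.decode("utf-8")

print(len(garbled), len(correct))  # 9 3: each UTF-8 byte became its own character
print(correct == "琪露诺")          # True
```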

The BeautifulSoup library

Creating a BeautifulSoup object

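```python
from bs4 import BeautifulSoup

# 'lxml' is a third-party parser (pip install lxml); 'html.parser' needs no extra install
soup = BeautifulSoup(response.text, 'lxml')
```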
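The second argument chooses the parser. If lxml is not installed, Python's built-in `'html.parser'` works as a drop-in. This is a minimal sketch that uses a hypothetical inline HTML string in place of `response.text`.

```python
from bs4 import BeautifulSoup

# Stand-in for response.text (hypothetical page)
html = "<html><head><title>Demo page</title></head><body><p>Hello</p></body></html>"

# Built-in parser: nothing extra to install
soup = BeautifulSoup(html, "html.parser")

print(soup.title.string)   # Demo page
print(soup.p.get_text())   # Hello
```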

Selecting the information you need

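```python
# CSS selector: '>' means direct child, '.' introduces a class name
items1 = soup.select("body>div.layout.layout--728-250>div.layout-left>div.cc-content.service-area>div.list.clearfix>a")
# select() returns a list (a ResultSet) of matching Tag objects, not a set
```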
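The sketch below shows how `select()` behaves on a made-up snippet shaped like the end of the selector above. The markup and class names are illustrative only. `>` matches direct children only, several classes are chained with dots, and the result is a list. `select_one()` returns just the first match, or `None`.

```python
from bs4 import BeautifulSoup

# Hypothetical markup, loosely modeled on the tail of the selector above
html = """
<div class="list clearfix">
  <a href="/a">First</a>
  <a href="/b">Second</a>
  <span><a href="/c">Nested</a></span>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

# '>' = direct child only, so the <a> inside <span> is not matched
links = soup.select("div.list.clearfix > a")
print(len(links))                       # 2

# select_one() returns the first match, or None when nothing matches
print(soup.select_one("div.list.clearfix > a").get_text())  # First
print(soup.select_one("div.missing"))   # None
```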

A few ways to output a tag's attributes and text

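```python
for i in items1:
    print(i.get('href'))   # value of the href attribute (None if it is missing)
    print(i.get_text())    # the tag's text; without () this prints the method object
```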
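Besides `.get()` and `.get_text()`, tags support a few other common access patterns. This is a short sketch on a hypothetical `<a>` tag.

```python
from bs4 import BeautifulSoup

# Hypothetical tag for illustration
soup = BeautifulSoup('<a href="/item/1" class="link hot">  Item one  </a>', "html.parser")
a = soup.a

print(a.get("href"))            # /item/1  (None if the attribute is missing)
print(a["href"])                # /item/1  (raises KeyError if missing)
print(a.get("title", "n/a"))    # n/a      (default for a missing attribute)
print(a["class"])               # ['link', 'hot']  (class is multi-valued, so a list)
print(a.attrs)                  # every attribute as a dict
print(a.get_text(strip=True))   # Item one (surrounding whitespace removed)
```

Use `.get()` when an attribute may be absent. Use `["..."]` when a missing attribute should be treated as an error.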

A simple example

```python
from bs4 import BeautifulSoup as bs
import requests

ua = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36'}

# The Top 250 spans 10 pages of 25 movies: start=0, 25, ..., 225
for page in range(0, 250, 25):
    url = "https://movie.douban.com/top250?start={0}".format(page)
    # print(url)

    # GET request
    response = requests.get(url, headers=ua)
    html_text = response.text

    # Create the BeautifulSoup object
    soup = bs(html_text, 'lxml')
    soup_lists = soup.find_all('div', class_='item')

    # Rank - Chinese title - score - link
    for item in soup_lists:  # 'item' instead of 'list', which shadows the built-in
        rank = item.find_all('em')[0].get_text()
        link = item.select('div')[0].select('a')[0].get("href")
        title = item.select('div')[0].select('a')[0].select('img')[0].get("alt")
        score = item.select('div')[1].select('div')[1].select('div')[0].select('span')[1].get_text()
        print(rank, title, score, link)
```
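Chained positional indexes like `select('div')[1].select('div')[1]...` break as soon as the page layout shifts. A sturdier alternative is to use class-based selectors with `select_one()`, as sketched below. The class names in the sample markup (`pic`, `hd`, `rating_num`) are an assumption about Douban's HTML and the sample data is invented, so check the selectors against the live page before relying on them.

```python
from bs4 import BeautifulSoup

# Hypothetical sample of one 'item' block (assumed structure and made-up data)
SAMPLE = """
<div class="item">
  <div class="pic">
    <em>1</em>
    <a href="https://example.com/movie/1/"><img alt="Example Movie" src="x.jpg"></a>
  </div>
  <div class="info">
    <div class="hd"><a href="https://example.com/movie/1/"><span class="title">Example Movie</span></a></div>
    <div class="bd"><div class="star"><span class="rating_num">9.0</span></div></div>
  </div>
</div>
"""

def parse_item(item):
    """Return (rank, title, score, link) for one div.item; missing fields become None."""
    def text(selector):
        tag = item.select_one(selector)
        return tag.get_text(strip=True) if tag else None

    img = item.select_one("div.pic img")
    link = item.select_one("div.pic a")
    return (
        text("div.pic em"),
        img.get("alt") if img else None,
        text("span.rating_num"),
        link.get("href") if link else None,
    )

soup = BeautifulSoup(SAMPLE, "html.parser")
for item in soup.find_all("div", class_="item"):
    print(parse_item(item))
```

Returning `None` for a missing field keeps one malformed entry from crashing the whole page with an `IndexError`.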

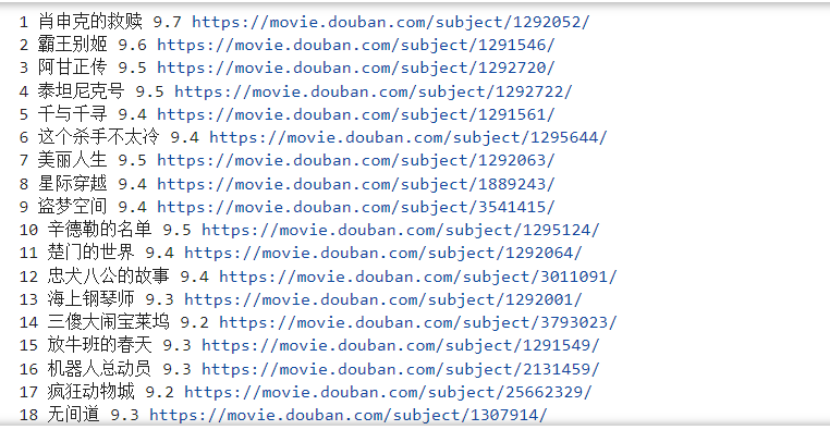

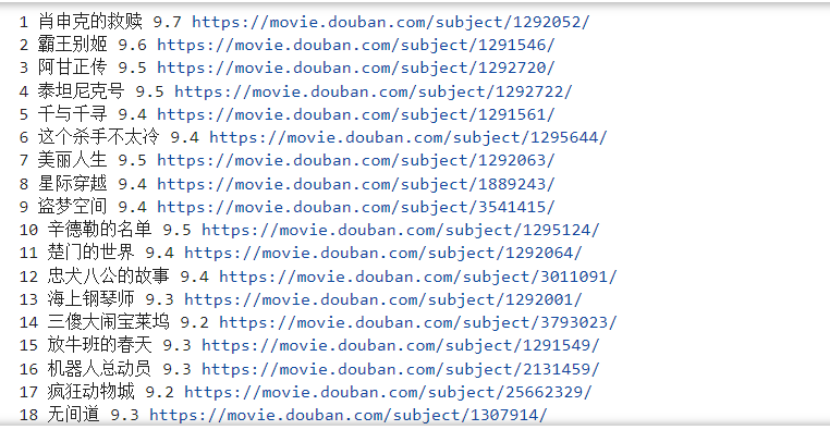

结果如下
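The example prints each row but never performs the final "save" step from the outline at the top. One option is to collect the tuples and write them with the standard `csv` module, as sketched below. The file name `top250.csv` and the sample row are placeholders.

```python
import csv

def save_rows(rows, path):
    """Write (rank, title, score, link) tuples to a CSV file with a header row."""
    # newline='' avoids blank lines on Windows; utf-8-sig adds a BOM so Excel detects UTF-8
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.writer(f)
        writer.writerow(["rank", "title", "score", "link"])
        writer.writerows(rows)

# Placeholder data; in the scraper, append (rank, title, score, link) instead of printing
rows = [("1", "Example Movie", "9.0", "https://example.com/movie/1/")]
save_rows(rows, "top250.csv")
```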